Finding Demo: A Journey to Demonstrate Document Q&A with LLMs

Charting the Course

Large language models (LLMs) and the chatbots built on them are very popular, but finding use cases for them, whether as a company or as an engineer looking to get more involved in the AI space, can prove pretty daunting.

As I’ve written about recently, I’ve been focused on educating myself on the technologies, foundations, and practical uses of LLMs at a more professional level. Drawing on examples from training courses as well as Google’s ever-evolving generative AI GitHub repository, I wanted to find a solution that might drive interest in using LLMs within a company.

Embarking on the Demo

I approached this demo with a few goals in mind. It should be easy to repeat, easy to deploy and understand, cheap to host and run, and impactful for the client.

After going through a list of common starter generative AI demos, I settled on document Q&A: the documents could be preloaded or loaded during the demonstration, and questions would be answered in real time.

The documents I’d be using are a collection of publicly available PDF catalogs covering a company’s range of products.

I’ll share the four paths I took, in the hope that something here helps you on your own journey.

Leaving the Dock with Jupyter Notebooks and Vertex AI

Jupyter notebooks are a popular platform for developing data science toolkits, building demonstrations, and generally getting your feet wet with concepts you can easily share. For those unfamiliar with them, Bard gives this tidy summary:

Jupyter notebooks are interactive documents for data exploration.

They mix code, text, and visuals, like interactive playgrounds.

You can run code, see results, and adjust, making it ideal for learning.

They’re open-source, work with many languages, and are great for sharing findings.

Think “interactive data playground” and you’ve got Jupyter notebooks!

Having used plenty of Jupyter Notebooks during labs within the Google Cloud Skills Boost paths, I thought it would be a great first step.

Since I’d be focusing on Google products, I started with Vertex AI Workbench, a customizable hosted instance where I could run a Jupyter notebook with easy access to Vertex AI APIs and any other Google Cloud service I might need. A notebook instance can be deployed in a Google Cloud project with a few gcloud commands or a simple script. It would be low-cost, and with the notebook saved in a remote repository, it would be ready to use quickly. Since the application in the notebook would be built by the demonstrator or the client, this was a no-brainer.

I started off very verbose: every function was broken into its own runnable cell, with a description of what it did.

The cells walked through installing dependencies, authenticating the environment, importing the text and embedding models, setting global variables, loading the documents, extracting their text, chunking it, building the context, and finally submitting the prompt and displaying the answers.
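To make the chunking, context, and prompt steps concrete, here is a minimal Python sketch. It is not the notebook’s actual code: a toy word-count “embedding” stands in for the Vertex AI embedding model, the function names (`chunk_text`, `embed`, `build_prompt`) are hypothetical, and the final call to the text model is left out, so `build_prompt` simply returns the prompt that would be submitted.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of `chunk_size` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    # Start a new window every `step` words; stop before a window would hold only overlap.
    return [" ".join(words[i:i + chunk_size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_prompt(question: str, chunks: list[str], top_k: int = 2) -> str:
    """Rank chunks by similarity to the question and wrap the best ones in a prompt."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    context = "\n---\n".join(ranked[:top_k])
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

Calling `build_prompt(question, chunk_text(catalog_text))` yields the string that would go to the text model. Swapping the toy `embed` for real embeddings changes the ranking quality, not the overall flow.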

I thought this experience was great. A potential client could read along and understand what was happening, or at the very least the demonstrator would have a script to follow while stepping through the notebook during the demo, even if they weren’t familiar with the technical details.

Then I realized that simpler might be better. We didn’t need to showcase every function; we could condense the functions as they would be in an application, automate as many manual steps as possible, and simply prompt the user for a question that kicks off the functions to generate the answer. I trimmed the notebook from 46 cells to just 19.

Once I removed some of the limits I had applied to prevent overspending, the answers were consistently good and relatively quick.

After presenting it to a small group, I found that this approach might be too technically dense for the intended audience. While it would suit a technical training session, it wouldn’t serve the audience or the demonstrator the way I wanted. So I set out to find something more user-friendly and less technically demanding, without abandoning what I’d already developed.

Following the Current from Jupyter to Web App

Essentially, the code in the notebook was already an application.

The frontend was the notebook itself, the backend was a local datastore declared in one of the code cells, and the Vertex AI API handled the embeddings and answers. All I had to do was split these into a separate frontend and backend and host the application on Google Cloud serverless services. The setup would be easy to repeat, with the code hosted in a repository.
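Splitting the notebook into a frontend and a backend mostly means moving the question-answering logic behind a small request handler. The sketch below shows one hypothetical way to do that with only the Python standard library: `answer_question` is a placeholder for the notebook’s retrieval and Vertex AI calls, and `handle_ask` turns a JSON request body into a status code and a JSON response, so it can be mounted in whichever web framework or serverless service you choose.

```python
from __future__ import annotations

import json


def answer_question(question: str) -> str:
    """Hypothetical placeholder for the notebook's retrieval + Vertex AI logic."""
    return f"(answer to: {question})"


def handle_ask(body: bytes) -> tuple[int, bytes]:
    """Backend endpoint logic: JSON with a 'question' in, JSON with an 'answer' out."""
    try:
        payload = json.loads(body or b"{}")
    except (json.JSONDecodeError, UnicodeDecodeError):
        return 400, json.dumps({"error": "body must be JSON"}).encode("utf-8")
    question = payload.get("question") if isinstance(payload, dict) else None
    if not isinstance(question, str) or not question.strip():
        return 400, json.dumps({"error": "missing 'question'"}).encode("utf-8")
    answer = answer_question(question.strip())
    return 200, json.dumps({"answer": answer}).encode("utf-8")
```

Keeping `handle_ask` free of any web framework means the same function can be exercised from a notebook cell, a unit test, or an HTTP server.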

Choosing and hosting an appropriate backend, handling data ingestion from the documents, and presenting it all through a simple frontend is non-trivial. Fortunately, there are plenty of samples to start from, and I was able to begin developing the application from my notebook code fairly quickly.

I’ll be honest: this was a lot of work for me, and by the time I had a working example, I knew it would take even more work to make it presentable. I didn’t have the luxury of spending all my working hours on this, so when I heard about a newly released option, I took a little detour.

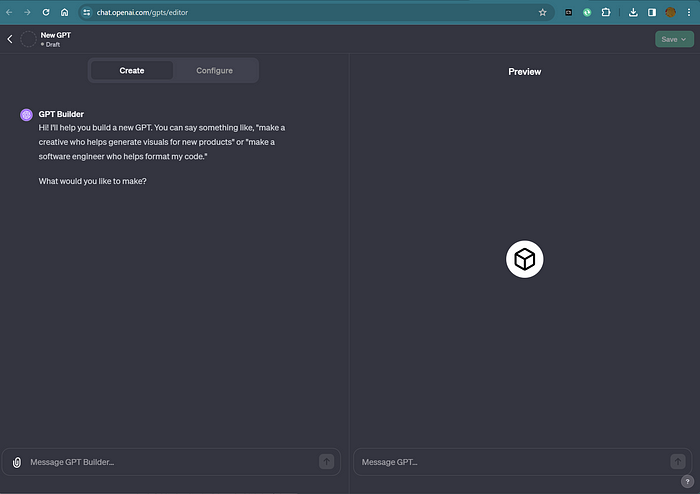

An Excursion with GPTs

Released at OpenAI’s first developer conference, DevDay, GPTs are custom versions of ChatGPT. The OpenAI keynote promised easy onboarding through a ChatGPT-driven concierge that would ask what you wanted to create, after which the GPT could be customized with uploaded documents and further prompt refinement.

It was like a sign from Poseidon: GPTs were easy to create, documents were easy to upload and process, and the chatbots were hosted with a familiar frontend. However, it wasn’t all sunny skies and clear waters.

Firstly, at the time of my experiment and as of this writing, you have to be a paying ChatGPT Plus subscriber to use GPTs.

Secondly, the results weren’t great. The GPT seemed to ignore some of the data in the documents and hallucinated in its responses, and despite time spent refining its instructions, I couldn’t get results like those from the Jupyter notebook.

Thirdly and fourthly, it wasn’t impressive enough to prompt (heh) further conversation, and the time it would take to create a custom GPT for each potential client could be prohibitive.

Still, the detour let me think beyond what I had been building, which ultimately led me to the most effective path.

Full Speed Ahead with Vertex AI Search and Conversation

With a simple-is-better mindset, I came back to Vertex AI and rediscovered Vertex AI Search and Conversation, previously called Generative AI App Builder.

Vertex AI Search and Conversation aims to give you a launchpad for search, conversation, or even recommendation apps built on a data store you supply. That data store can be built from website URLs, documents in a Cloud Storage (GCS) bucket, a BigQuery dataset, or even data from an API.

Setup is simple. For this use case, I created a bucket and uploaded the PDFs I wanted to answer questions about. I added that bucket as a data store, then created my search app and selected that data store, which Vertex AI was already processing.
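The bucket half of that setup can also be scripted. The sketch below is a hypothetical alternative to uploading by hand, not necessarily how the bucket for this demo was populated: it uses the `google-cloud-storage` client library, and the names `plan_pdf_uploads`, `upload_pdfs`, and the `catalogs/` prefix are my own assumptions. Creating the data store and the search app is not covered here.

```python
from __future__ import annotations

from pathlib import Path


def plan_pdf_uploads(paths: list[str], prefix: str = "catalogs/") -> list[tuple[str, str]]:
    """Pair each PDF path with the object name it will get in the bucket (non-PDFs are skipped)."""
    pdfs = sorted(p for p in paths if Path(p).suffix.lower() == ".pdf")
    return [(p, prefix + Path(p).name) for p in pdfs]


def upload_pdfs(bucket_name: str, local_dir: str, prefix: str = "catalogs/") -> list[str]:
    """Upload every PDF in local_dir; needs google-cloud-storage and Application Default Credentials."""
    from google.cloud import storage  # imported here so plan_pdf_uploads works without the library

    bucket = storage.Client().bucket(bucket_name)
    local_files = [str(p) for p in Path(local_dir).iterdir() if p.is_file()]
    uris = []
    for path, blob_name in plan_pdf_uploads(local_files, prefix):
        bucket.blob(blob_name).upload_from_filename(path)
        uris.append(f"gs://{bucket_name}/{blob_name}")
    return uris
```

For example, `upload_pdfs("my-catalog-bucket", "./pdfs")` (a made-up bucket name) returns the `gs://` URIs of the uploaded catalogs, all in the same bucket you would then select when creating the data store.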

Options are limited when creating an app: you can choose longer or more concise answers, whether to allow follow-up questions or image search, and whether the app should autocomplete queries or accept feedback on its results. I was testing questions and answers in a widget within minutes of starting.

Having the search experience in the Google Cloud console was useful, but it wouldn’t work for a demo. Fortunately, Google provides the HTML code for adding the widget to your own web application. So I set aside my custom web app from before, added the widget code to a sparse webpage, and deployed it to Cloud Run. Suddenly I had a search widget, backed by an LLM, that answered questions verbatim from a set of documents. In most cases, the results were on par with or better than what I got from my Jupyter notebook experiment.
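A sparse page like this needs very little code. The sketch below assumes a plain Python standard-library server, which may differ from what was actually deployed; `WIDGET_SNIPPET` is a placeholder where you would paste the HTML the console generates (that snippet is not reproduced here), and `build_page` and `run_server` are hypothetical names. Cloud Run tells the container which port to listen on through the `PORT` environment variable, which `run_server` reads.

```python
from __future__ import annotations

import html
import os
from http.server import BaseHTTPRequestHandler, HTTPServer

# Placeholder: paste the widget HTML copied from the Google Cloud console here.
WIDGET_SNIPPET = "<!-- Vertex AI Search widget snippet goes here -->"


def build_page(title: str, widget_html: str = WIDGET_SNIPPET) -> bytes:
    """Return a minimal HTML page that hosts the search widget."""
    safe_title = html.escape(title)
    page = (
        "<!DOCTYPE html>\n"
        '<html lang="en">\n'
        f'<head><meta charset="utf-8"><title>{safe_title}</title></head>\n'
        f"<body>\n  <h1>{safe_title}</h1>\n  {widget_html}\n</body>\n</html>\n"
    )
    return page.encode("utf-8")


class PageHandler(BaseHTTPRequestHandler):
    page = build_page("Catalog Q&A Demo")

    def do_GET(self) -> None:
        # Serve the single page at the root; everything else is a 404.
        if self.path not in ("/", "/index.html"):
            self.send_error(404)
            return
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(self.page)))
        self.end_headers()
        self.wfile.write(self.page)


def run_server() -> None:
    """Serve the page on the port Cloud Run provides (8080 if PORT is unset)."""
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("", port), PageHandler).serve_forever()
```

Call `run_server()` from the container’s entrypoint. Because the widget handles the search itself, the service only has to serve one static page.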

The setup is incredibly efficient. It costs little to host because Cloud Run can scale to zero, the PDFs stored in the GCS bucket take up little space, and the per-token cost of calling the Vertex AI API is minimal.
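The cost argument is simple arithmetic: storage is billed for the data at rest, while model calls and Cloud Run compute are billed roughly in proportion to the requests served, so a demo that sits idle between sessions costs very little. The sketch below models that with made-up prices. Every number in `HypotheticalPrices` is a placeholder, not a real Google Cloud price, and billing units (tokens, queries, vCPU-seconds) vary by service, so check the current pricing pages before relying on any estimate.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    storage_gb: float                # size of the PDFs in the bucket
    requests: int                    # questions asked during demos
    units_per_request: float         # billable units per request (e.g. tokens)
    busy_seconds_per_request: float  # time an instance spends serving one request


@dataclass
class HypotheticalPrices:
    storage_per_gb_month: float
    per_1k_units: float
    compute_per_second: float


def estimate_monthly_cost(usage: MonthlyUsage, prices: HypotheticalPrices) -> float:
    """Rough monthly cost; idle time adds nothing because the service scales to zero."""
    storage = usage.storage_gb * prices.storage_per_gb_month
    model = usage.requests * usage.units_per_request / 1000 * prices.per_1k_units
    compute = usage.requests * usage.busy_seconds_per_request * prices.compute_per_second
    return round(storage + model + compute, 2)


# Made-up example inputs, for illustration only.
demo_month = MonthlyUsage(storage_gb=0.5, requests=2000, units_per_request=1500, busy_seconds_per_request=2)
prices = HypotheticalPrices(storage_per_gb_month=0.02, per_1k_units=0.002, compute_per_second=0.00002)
print(estimate_monthly_cost(demo_month, prices))
```

With these invented inputs the estimate comes to about $6.09 for the month, almost all of it driven by usage; setting `requests=0` leaves only the $0.01 of storage.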

Ultimately, I determined that this approach makes the best demonstration for our intended audiences and needs.

Disembarking

Through this journey, we explored four paths to building an LLM-powered document Q&A demo. While each approach had its merits, Vertex AI Search and Conversation emerged as the winner for its simplicity, ease of deployment, and impressive results. It lets anyone use LLMs to search documents and answer questions directly from their content, without needing extensive technical expertise.

- Ready to set sail? Launch your own Vertex AI Search and Conversation demo in minutes. Follow the resources provided in this post to create your custom document-powered dialogue experience.

- Don’t chart your course alone! Join the vibrant LLM community on online forums and platforms to share your experiences, get help with technical challenges, and discover new possibilities.

- Beyond Q&A, delve deeper! Explore the vast potential of LLMs for tasks like summarizing text, writing in different creative formats, and even generating code. Get creative and use AI to reach new heights of productivity and innovation.

By embracing the journey and venturing into the world of LLM-powered document interaction, you can unlock a powerful new tool for your work and unleash the hidden potential within your data. So, set sail, be bold, and let the LLMs guide you to new horizons of possibilities.

Note for Transparency: I leveraged generative AI (ChatGPT and Bard) to create the header image and to assist in the outlining and editing of this blog post. The words, thoughts, and actions are all my own.